How well did I design this lesson? I think it’s in good shape, for I’ve polished and PDCA’ed it many times over the years. Could I find out how well students have prepared for a lesson? How long did they spend? Where did they struggle? Could I discover external events – NMC or otherwise – that affected many students at once? After a lesson, can students summarize key points? Did they take their learning seriously? What do they think about their learning process itself? I’ve often wanted answers to these and similar questions, sometimes right away, sometimes a week or a semester later.

Zoomerang – purchased later by SurveyMonkey – was useful for formal surveys, but in my opinion, the tool was overly complex and too labor-intensive for day-to-day use. I looked at Moodle’s Survey module; it could not be configured with my own questions. I tried Moodle’s Choice module, an easy way to make one-question multiple-choice surveys. (I now use Choices regularly, but unfortunately, the feature does not offer open-ended questions or multiple-question surveys.) Clickers and PollEverywhere offer multiple questions, but they, too, are less suited to reflective questioning.

Media Technologies folks knew of my need, so when Moodle added its Feedback module, I was encouraged to try it out. Within an hour I was hooked; I now include Feedback activities in most lessons in a few of my courses, and I consider Feedback to be one of the most valuable features of Moodle. As with quizzes, there are several types of questions a Feedback activity can include – multiple choice, multiple answer, numeric answer, short essay, etc. This variety is helpful in forming pre-assessment, mid-lesson course correction, and meta-cognition surveys.

Construction of surveys is fairly easy. There are a few minor glitches; as with any feature of a course management system, one needs to pay attention to procedure and to practice a bit. Once built, Feedback module surveys are also simple to replicate and adapt.

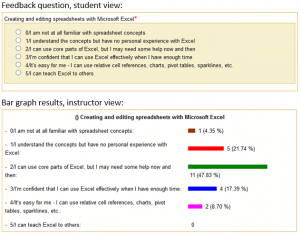

How do Feedback results appear? For multiple-choice and multiple-answer questions, I don’t need to know individual answers; a graphed aggregate, like the bar charts in clicker software, works fine. For example, see the attached question with corresponding results for a pre-assessment question on CIT 100 students’ familiarity with Microsoft Excel. I often share results like these with students so that they understand my purpose for lighter or heavier topic emphasis. Note that answers – even essay style – are usually reviewed anonymously. There is a configuration setting to tell Moodle whether or not to even record students’ names, so if names are recorded and an instructor chooses to dig deeper, it is not hard to identify authors of responses.

To engage students, would I need to assign points? Surveys are not really a replacement for other graded assignments, but are useful activities that can be graded. When I was considering grading at least some of my surveys, I realized that I would not be willing to add a significant grading burden; few of us can. I chose to assign a nominal number of points – not enough to strongly impact final grades, but enough to motivate participation. Moodle easily displays a list of students who respond and another list of those who do not, so it is easy to use that simple participation measure when entering points into the grade book.

Can I trust the answers wholeheartedly, whether or not the surveys are graded? What does it mean if I can – or if I can’t? In my case, I believe “good enough” truly is sufficient, for Feedback module surveys provide general evidence of preparedness and they encourage meta-cognition, one of the prime aspects of critical thinking.